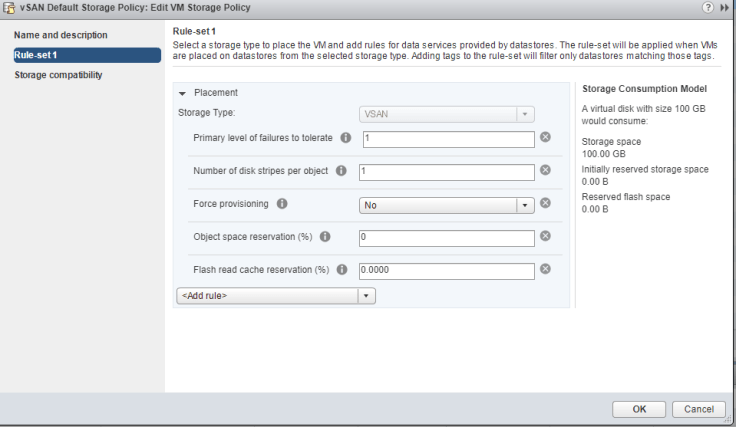

I get a lot of questions about vSAN and its storage policies: “What exactly does FTT mean?” and “What should I set the stripe to?” The default storage policy with vSAN is FTT=1 and Stripe=1. FTT means Failures To Tolerate. Stripe is how many drives an object is written across.

FTT=1 by default results in a mirror (RAID 1) of all data, which is what you get in a 2 node configuration. You can lose one drive or one node, at the cost of 200% storage usage. In an all flash configuration with 4 or more nodes you can choose RAID 5 instead, which distributes data across nodes with a parity of 1 and uses roughly 133% storage.

FTT=2 with RAID 6 requires 6 nodes, and you can lose 2 drives or 2 nodes. RAID 6 uses a parity of 2 and results in 150% storage usage.
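To make the storage usage numbers concrete, here is a rough sketch of the arithmetic. The 200% and 150% figures come from this post; the 4/3 multiplier for RAID 5 assumes the usual 3 data + 1 parity layout. The function and table names are made up for illustration and are not part of any VMware tool.

```python
# Raw-capacity multipliers per (RAID level, FTT).
# 2.0 and 1.5 are the figures quoted above; 4/3 assumes a 3+1 RAID 5 layout.
OVERHEAD = {
    ("RAID1", 1): 2.0,
    ("RAID5", 1): 4 / 3,
    ("RAID6", 2): 1.5,
}


def raw_capacity_gb(usable_gb, raid, ftt):
    """Return the raw capacity consumed to store usable_gb of data."""
    return usable_gb * OVERHEAD[(raid, ftt)]


if __name__ == "__main__":
    for (raid, ftt) in OVERHEAD:
        print(raid, "FTT=%d" % ftt, round(raw_capacity_gb(100, raid, ftt), 1), "GB raw per 100 GB")
```

This ignores witness components, slack space, and deduplication, so treat it as a back-of-the-napkin estimate only.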

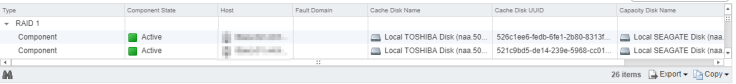

If you want to check the status, go to Cluster > Monitor > vSAN > Virtual Objects. From here you can see the FTT of each object and which disks it involves. In the picture you can see that with the 2 node vSAN cluster the objects are on both nodes, resulting in RAID 1 or mirroring.

Now let's break down what each setting is.

Striping breaks apart an object so it is written across multiple disks. In an all flash environment there is still one cache drive per disk group, but it is used just to cache writes. The rest of the drives are used for reads. In a hybrid configuration reads are cached on the SSD, but if the data is not in the cache it is then retrieved from the slower disks. This results in slower performance, but having the object broken apart and written across multiple disks can increase read performance. I would recommend leaving the stripe at 1 unless you encounter performance issues. The largest size an object can be is 255GB. If it grows beyond that size it will be broken up into multiple objects across multiple disks.
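As a rough illustration of how the stripe setting and the 255GB limit interact, here is a simplified model. It assumes the number of pieces per copy of the data is whichever is larger: the stripe width or the number of 255GB chunks needed. The function name is hypothetical, and real vSAN placement is more involved than this.

```python
import math

MAX_COMPONENT_GB = 255  # size limit quoted in this post


def min_components(size_gb, stripe_width=1):
    """Estimate the minimum number of pieces per copy of an object.

    Simplified assumption: an object is split into at least stripe_width
    pieces, and into more if it does not fit in 255GB chunks.
    """
    by_size = math.ceil(size_gb / MAX_COMPONENT_GB)
    return max(stripe_width, by_size, 1)


if __name__ == "__main__":
    print(min_components(100))      # small disk, default stripe
    print(min_components(600))      # larger than 255GB, split by size
    print(min_components(100, 2))   # small disk, stripe width of 2
```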

Force provisioning allows an object to be provisioned on a datastore even if the datastore is not capable of meeting the storage policy. For example, you have the policy set for FTT=2, but the cluster has only 4 nodes, so it's only capable of FTT=1.
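The decision can be pictured as a small toy model. This is only a sketch of the logic described above, not how vSAN implements it; the function name and the status strings are made up for illustration.

```python
def provision(cluster_nodes, required_nodes, force_provisioning=False):
    """Toy model of what happens when a policy asks for more nodes
    than the cluster has."""
    if cluster_nodes >= required_nodes:
        return "compliant"
    if force_provisioning:
        # The object is created anyway, but does not meet its policy.
        return "provisioned (non-compliant)"
    return "rejected"


if __name__ == "__main__":
    # FTT=2 needs 6 nodes in this post's example, but the cluster has 4.
    print(provision(4, 6))
    print(provision(4, 6, force_provisioning=True))
```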

Object Space Reservation controls how much of an object is thick provisioned. By default all storage is thin provisioned with vSAN. You can change this by increasing the percentage, anywhere between 0% and 100%. If you set it to 100% then the object will be thick provisioned. The only caveat is that with deduplication and compression it's either 0% or 100%. By default the page file is reserved at 100%, but there is a command line setting you can change if you need to save this space.
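The reservation is simple percentage math. Here is a minimal sketch, with a hypothetical helper name, that shows how much space is set aside up front for a given object size:

```python
def reserved_gb(object_size_gb, reservation_pct):
    """Space reserved up front for an object at a given reservation percentage."""
    if not 0 <= reservation_pct <= 100:
        raise ValueError("reservation_pct must be between 0 and 100")
    return object_size_gb * reservation_pct / 100


if __name__ == "__main__":
    print(reserved_gb(100, 0))    # thin provisioned, nothing reserved
    print(reserved_gb(100, 100))  # thick provisioned, everything reserved
    print(reserved_gb(200, 25))   # partial reservation
```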

Flash Read Cache reserves the amount of cache you want set aside for objects, as a percentage of the object's size. The max amount of storage the cache drive can use is 800GB. If you have 80 VMs each with 100GB of storage and a reservation of, say, 10% (10GB per VM), then the entire cache drive is used. When you power on the 81st VM the cache drive will not be able to give that VM any read cache. That is why it's best not to change the default unless you have a technical reason to.
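Here is the arithmetic behind that example as a small sketch. The 800GB cache and the 80 VMs with 100GB each come from the text; the 10% reservation is my assumption, chosen because it makes those numbers line up. The function name is made up for illustration.

```python
def vms_with_read_cache(cache_gb, vm_size_gb, reservation_pct):
    """How many VMs can get their full read cache reservation.

    reservation_pct is an assumed policy value (percent of VM size).
    """
    per_vm_gb = vm_size_gb * reservation_pct / 100
    if per_vm_gb == 0:
        raise ValueError("nothing is reserved at 0%")
    return int(cache_gb // per_vm_gb)


if __name__ == "__main__":
    # 800GB cache, 100GB VMs, assumed 10% reservation
    print(vms_with_read_cache(800, 100, 10))
```

With those inputs the cache is fully reserved after 80 VMs, which is why the 81st gets nothing.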


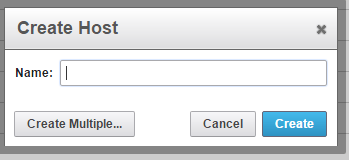

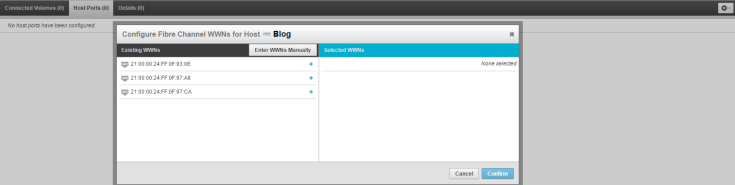

4. If you are running vCenter, Cody Hosterman has a great PowerShell script that will import all your hosts and WWNs for you. If not, here is where we enter the WWNs. Click on Host Ports, then click the gear on the right side and select Configure WWN. If you have already zoned in the storage you will see your WWNs here; if not, you can go ahead and enter them manually.

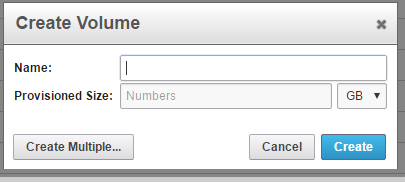


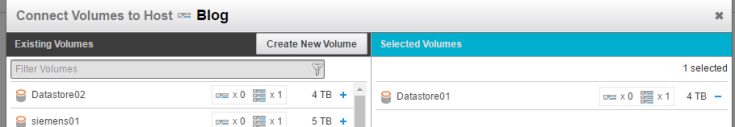

5. Now that your host is created and zoned in, it's time to create the volume and connect it to the host. Click the Volumes + sign at the bottom left of the screen, and enter the size of the volume you want.
